4 Steps for Supporting Faculty with GenAI

A look at how I work with faculty to guide them to feel more comfortable and able to engage with GenAI

Quick reminder of upcoming events! Join me at these two in-person events coming up: I’ll be the keynote for the New England Faculty Development Consortium Fall Conference on Friday, October 18th at College of the Holy Cross in Worcester, MA and a day-long event with NERCOMP, Generative AI and Higher Education - In-Person Workshop on Wednesday, November 20th at College of the Holy Cross in Worcester, MA. I’m also doing this virtual NERCOMP webinar, Stand Up, Stand Out: Mastering Public Speaking in Higher Education on Wednesday, October 9th at 11am (EST).

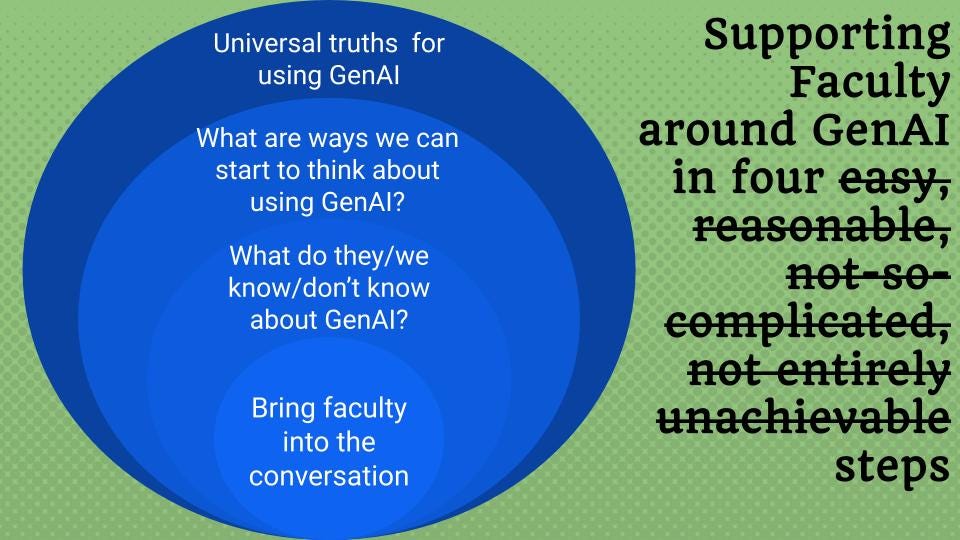

The 4 Steps at a Glance

Recently, I did a very meta thing. I gave a talk to folks in a center for teaching and learning about how we talk with faculty about thinking through, navigating, and engaging with generative AI. This post is an edited version of that talk, in which I mark out (from my experience) the important moves and approaches for supporting faculty in this space as we continue to make sense of generative AI in the teaching and learning landscape.

I do think that what I figured out in talking with faculty about generative AI comes from a place of experience, expertise, and experimentation and, of course, I don't want to pretend that anything that I'm going to discuss here is somehow new or fundamentally different from what many of you may already be doing. Yet, I do think my particular arrangement and emphasis have proven to be helpful and may benefit others. I have been professionally grappling with education and technology for 15 years and have been finding my way through these spaces since the 1990s, when I stumbled onto two pivotal educational technologies: audiobooks and the internet.

What does it mean to support faculty around generative AI? I'm going to briefly sketch out my four steps, and then we'll go into further depth with each of them. These four steps help situate faculty in their discomfort, confusion, or assuredness so they can consider different ways of settling in with AI. While I present these steps in a linear order that I think will make sense, they can also be interwoven, as I have often done.

The first step is bringing faculty into the conversation. Folks want to have the conversation, but there's a lot of tension and we have to ease into it. Some folks are deep into using it. Others are deeply concerned about students using it. Others are just deeply concerned that generative AI exists. If you ask 10 faculty how they feel about generative AI, you'll come up with 30 different responses, and if you ask them 20 minutes later, you'll have 50 new ones. There’s so much packed into this technology that creates this wide spectrum of feels. Acknowledging that is the first step in all this.

The next step is to determine what we and they know and don't know about generative AI. This step creates some norms and basic grounding about what it is and what it isn't. That's a mixture of threshold concepts and clarifying what we know in the moment, because what we know actually changes. The AI that we had in November 2022 has different affordances than the AI that we have today.

The third step asks: what are the ways we can start to think about using generative AI? This means giving faculty some buckets for organizing how they think about it, and it's one of the most useful things we can do. Trying to think about what AI means for teaching and learning can be a bit meta for all of us. So, starting with contexts that help them explore provides tangible value.

And then finally, there are the universal truths for using generative AI. These aren't real universal truths, but they're clear, simple, direct ideas about generative AI that can create some of those key "aha" moments that we need to start thinking about generative AI.

Let’s dig a bit deeper into each of them!

Step #1: Bringing faculty into the conversation

One of my favorite things to do when working with faculty is to start almost every conversation with some kind of icebreaker. That icebreaker is usually, "What is generative AI, but wrong answers only?" (as in the image above). It's an activity that loosens up the tension. It gets faculty to laugh in the face of whatever this is or to just poke fun at it enough to get through some of the discomfort.

My friend and co-author, Esther Brandon, also likes to do what she calls an "airing of the AI grievances" in true Festivus tradition. Because there's a lot of existential weight and tension that comes with generative AI, stepping into it with a bit of levity, curiosity, and norming can be incredibly powerful to get folks to breathe and feel a bit more settled.

Once we’ve punctured that tension bubble (at least a little), I move into a series of questions. These questions are really just taking a pulse and getting a sense of where people are and what they're doing with generative AI.

I do this as publicly as possible, meaning that I want folks to get a sense of what their peers are doing, what they're looking at, and how they're feeling. I might do this as a virtual poll, a show of hands, or comments in the chat. The idea is to help folks see where they are and who else is there.

This approach also allows for a bit more conversation and back-and-forth, which is a good practice and demonstration with faculty about how to talk about generative AI with students. Also, it allows for sharing and learning from one another, which is something I hammer throughout my talks and workshops: the answers don't sit in one of us, but all of us, and the crowd is always smarter than the individual. We can only figure this out collectively, not individually.

I always start by getting a sense of how ambivalent people feel about using generative AI. I then move into asking questions about how they may be using it personally or professionally. Are they using it to create course content or course materials? Are they using it with students? These questions largely just unpack where people are, but just as importantly, they let people know that there are others, either similar or different, who are here figuring this out too. This easing is also just acknowledging to the community that this range is okay and expected.

I find that doing this as a first step, before anything else, helps faculty feel seen by acknowledging their issues and concerns about generative AI. I don't think we can have these conversations with faculty without taking a moment to validate and recognize the weight that generative AI represents.

It's something I've been saying since the very beginning: we cannot ignore this weight or fail to give it space, even for those folks who are deep into generative AI. We can't move forward without actually just taking a breath, without actually acknowledging that this challenges many of us in many different ways. There's some excitement, there's some enthusiasm, some really cool stuff that potentially is on the horizon, and there's a lot more complicated stuff. There are a lot more challenges that are all being foisted on faculty's shoulders.

Just like our students, who can't engage in learning until some of their essential needs are met, I think our faculty will not engage intellectually until their psychological needs are met. Without some validation or recognition, it's just a lot.

Step #2: What do they/we know/don’t know about GenAI?

I then move into trying to clarify what is and isn't true of generative AI, and of course, this is really tricky. I use this space to build some confidence in faculty, challenge some of the whispers out there, and help folks better understand how generative AI works.

Typically, it’s a series of true-false statements about generative AI. Many of these may seem obvious, but each one allows us to dig into the idea behind it and consider why some folks answer the way they do. And again, it invites conversation and curiosity. It allows us to start at the surface and then wade into things more. It also allows us to grapple with the truths we have to deeply understand and the falsehoods that keep us from looking at it in different ways.

One major goal in this section is wrapping our heads around the challenging and complex ways that AI and the discourse of AI seduce and confuse us. Unlike other technologies that are often convoluted (ahem, metaverse, here’s looking at you!), generative AI is incredibly easy to use. It comes to us in the form of a chat box. If you've been on the internet for any time, you know how to use a text box. I can go back to my days on AOL chat or IRC in the 1990s; it’s a central feature of Internet life. The creators of generative AI took a complex technology and gave it to us in a really simple package, and that’s what makes it so seductive. You can start using it in seconds; you can’t say the same of other cutting-edge technology.

This part also explores questions about AI sentience and about how generative AI "thinks," or rather how it arrives at its answers, which is different from thinking. All of this culminates in helping folks understand that generative AI is not aware of meaning and not aware of its answers. It does not think about its answers; it calculates its answers. The meaning comes in when somebody reads it and makes decisions about what it means. The generative AI is just calculating, and this is something we need students to better understand as they use it.

When you give AI a prompt to create a poem, what comes back is not a poem. This is one of those areas where we really have to understand that, within human meaning-making, it is not a poem until somebody comes in, reads it, and declares it a poem. On its own, it is simply a calculation of tokens. So I really try to emphasize that, along with the agency that we give over to generative AI when we talk about it lying rather than calculating inaccurate answers, or when we talk about it hallucinating. This is the language of agency that we're giving over to these tools, and with it, we give over our ability to really evaluate and understand the responses that it's giving.

We also explore how generative AI will show up in our industries. It may look different, but it is also similar to how the internet has infiltrated every industry and discipline. We talk about the extreme limitations of generative AI detectors (of course, you can read more with this series of posts: Post #1, Post #2, Post #3).

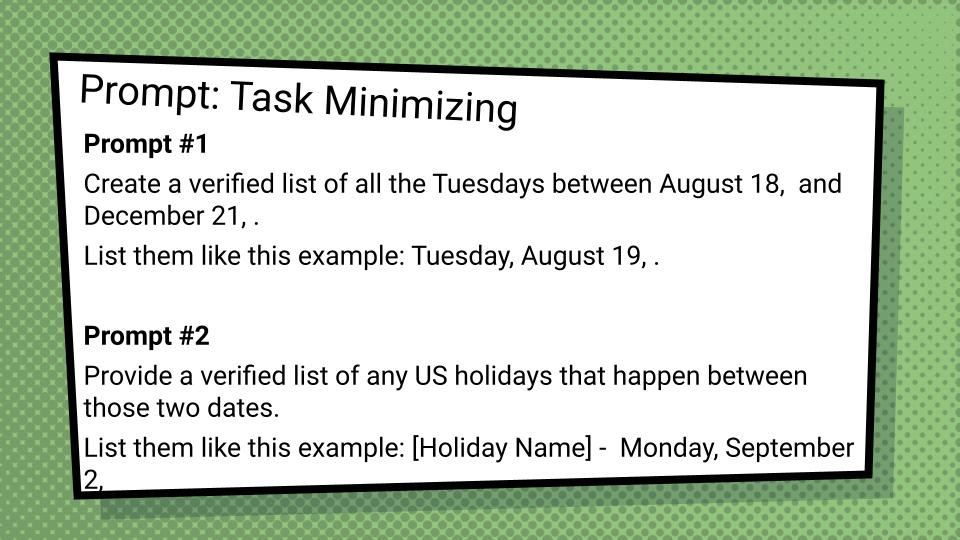

Step #3: What are ways we can start to think about using GenAI?

At this point, I’ve validated their concerns and done some norming and myth-busting around generative AI. We can then actually start talking about some clear ways to use it.

In this space, I provide some categorical uses, examples of each category, and a prompt library that reinforces this structure. Now, the structure is more folksonomy than taxonomy. There's nothing scientific or perfectly clear about these categories, but they do provide a beginning means for faculty to think about the category of work they want to use generative AI for and what might be ideal prompts to start with. The categories aren't perfect, but they help to compartmentalize the wide range of uses of generative AI before them.

There's just something about AI that is like the streaming service challenge, or the 500 cable channels problem. It's the paradox of choice coupled with the ways AI leads us to think differently about what we’re asking for (so maybe it’s the paradox of choice meets the unintended consequences of wishes problem?). My intention here is to give them confined choices and examples of successful wishes so that they can abstract from there. It supports faculty in building their own internal framework for ways to work with generative AI.

I move through these categories, which include efficiency and brainstorming, content creation, data and info processing, and communication. Again, you can certainly see overlaps among these, and there are probably categories that I'm not including that you certainly could see, but it's just giving the structure that allows them to feel like they have starting points.

I structure each category the same way. I explain the category, provide some examples of what this category might look like, and then share a specific prompt and its results that demonstrate the category well. When I share the prompt and its results, they are always run beforehand and the answers copied in rather than done as a live demo, because I find live demos can just be a time-eater without the payoff, especially if there are technical problems with the tool.

When talking about Efficiencies as a category, I’m pointing to work that has to be done but that often has no intrinsic value. For instance, getting the list of dates to put into the syllabus. Therefore, I share this prompt that many faculty find incredibly useful in reducing the effort of getting the relevant dates:

Prompt #1

Create a verified list of all the Tuesdays between August 18, and December 21, .

List them like this example: Tuesday, August 19, .

Prompt #2

Provide a verified list of any US holidays that happen between those two dates.

List them like this example: [Holiday Name] - Monday, September 2,
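
Because generative AI calculates its answers rather than consulting a calendar, a list like the one Prompt #1 returns is worth spot-checking. Here is a minimal Python sketch of one way to produce the same kind of list deterministically. It is an illustration, not part of my workshop materials: the function names `weekdays_between` and `format_like_prompt` are made up for this example, and the year 2030 in the usage lines is an arbitrary placeholder that you would swap for your own term's year. It does not cover holidays (Prompt #2), since Python's standard library has no holiday calendar.

```python
from datetime import date, timedelta


def weekdays_between(start: date, end: date, weekday: int = 1) -> list[date]:
    """Return every date from start to end (inclusive) that falls on `weekday`.

    Weekday numbering follows date.weekday(): Monday=0, Tuesday=1, ... Sunday=6.
    """
    # Number of days from `start` to the first matching weekday (0 if start matches).
    offset = (weekday - start.weekday()) % 7
    current = start + timedelta(days=offset)

    days = []
    while current <= end:
        days.append(current)
        current += timedelta(days=7)  # jump a week at a time
    return days


def format_like_prompt(d: date) -> str:
    """Format a date in the 'Tuesday, August 19, YYYY' style the prompt asks for."""
    return f"{d.strftime('%A, %B')} {d.day}, {d.year}"


if __name__ == "__main__":
    # Placeholder year (an assumption): replace 2030 with the year of your term.
    for d in weekdays_between(date(2030, 8, 18), date(2030, 12, 21)):
        print(format_like_prompt(d))
```

Comparing this output against the AI's answer is a quick way to show faculty that "verified" in a prompt is a request, not a guarantee.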

Step #4: Universal truths for using GenAI

In guiding faculty to this point, where they are interested in using generative AI, it’s now possible to discuss some universal truths about its use. Again, these aren't quite universal truths, but they are the clearest, simplest, and most important things that I find essential for engaging with generative AI. Maybe we could call them founding practices. To me, these are the "now you know" moments of the 1980s cartoons and after-school specials.

These are typically just a mixture of important ideas to think about in whether and how we use generative AI. I often start with the fact that abstinence is not a strategy. Avoidance has never served us; it certainly hasn't done us well in society. It hasn't worked in Prohibition, in the War on Drugs, or in abstinence-only sex education. It just doesn't work. It's not valuable in ways that are going to be helpful for other faculty, for colleges and universities, and for our students. It’s not a charge to use it extensively or without regard but to recognize its presence and consider how it is likely to be a part of learning, formal or informal, whether we want it or not.

I also discuss the two-bucket challenge. I have discussed the two-bucket challenge in a previous post as such:

The first bucket is a working understanding of how generative AI works; its limitations, its capabilities, its biases, and how it fits into the larger cultural landscape of late-stage capitalism.

That’s essential to knowing what it is and isn’t, can and can’t do, but more importantly, to sifting through the promises of a lot of tech-bros in Silicon Valley and business hype about a techno-utopia that always results in buying a product to solve all our woes.

The second bucket is a deep knowledge of the subject matter you’re working with it on. It just doesn’t know things; it only calculates responses and that can only get one so far. When it comes to disciplinary knowledge, it still falls drastically short. Yes, it can produce facts but it often can’t produce knowledge.

As leaky buckets, we have to continue to re-immerse ourselves in the changes that regularly occur in AI and our disciplines.

I emphasize the need for us to collectively figure this out; that figuring this out should not sit with any individual person.

I push them to really find a use case that works for them, even if they are against generative AI. I want them to find a use that is an "aha" moment for them. They don't have to continually use it, but they have to find a good use case if they're going to understand and appreciate the ways others are using this. It can't just be superficial. In other words, it can't just be, "Oh, I see it does this." It has to be something that they feel is actually valuable to them. This is a key means to build both empathy and understanding about how others are going to use this, whether individual faculty want to use it or not. If you can understand how this tool can feel transformative to others through your own experience, it can help us move away from the antagonistic challenges that arise towards our students with such technologies.

There are also the practical ways of using generative AI. For instance, always ask it what it's missing in its answers. This is a low-bar but essential move that always yields more results. It strikes many folks as both obvious and something they would not have thought to do.

I encourage folks to think about how to flip the script with generative AI and turn it into the interviewer. That's where I think some of the most powerful ways of using it are. When it interviews the user or pushes their brainstorming, I think it helps faculty and students realize that it does not have to be simply a place for “answers.” There are also the voice tools that enhance the interaction while simultaneously turning your words into text for later use. There are a lot of possibilities here for helping students get outside their own heads in a way that is different from the white screen of a document program.

And then, of course, there is the final tip: always ask it to improve your prompt before you run the prompt that you actually want to use. Again, I've talked about this elsewhere, but it's a really insightful way to both get a better prompt and start to understand how generative AI works on the underside and what it looks for in prompts.

Those are the four moves that I’ve found useful in talks and workshops when supporting faculty in wrapping their heads around generative AI. Again, these aren't anything new; you could likely see that within each of them. Still, having done many of these workshops and talks, I thought it would be a useful thing for folks to take a look at and benefit from.

AI+Edu=Simplified by Lance Eaton is licensed under Attribution-ShareAlike 4.0 International

I'd like to suggest adding a Step 2.5. It is important for faculty to reflect on what matters in the narrative of student learning for any given assignment to know which tasks must be conserved according to traditional methods. In other words, conduct a triage of instruction to determine what *cannot* have an AI component to it. Once the "space" has been established for what *can* have an AI component, then you can discuss what is possible in that space.

Second, it is useful for faculty to think incrementally about the use of AI so that it does not feel so expansive. I suggest starting with ways to streamline certain tasks at a relatively low stakes level before considering transformative approaches that leap too far, too fast. (Think SAMR model).

This is excellent. Really useful stuff. Do you have anything more on the icebreakers you used here?