A Framework for Using AI with OER

Helping to find a pathway to OER and AI

I’m a little behind on posting this one. I gave a talk in June about OER & AI. It focused on a program in Massachusetts that sought to support faculty developing OER content, as part of a larger grant that also aimed to create synergy among faculty across the state’s community college, state university, and UMass institutions.

The request was to provide some context about OER and AI, along with guidance on how to use AI to inform the development of OER effectively. As is often the case, the audience ranged widely: folks completely new to OER, folks completely new to AI, folks completely new to both, and folks with strong familiarity with both.

I’m not going to share the entire text, as I think some of it dovetails with other things I’ve shared here already (you can check out the slides as well as the resource document). But I do want to home in on the piece that was newest for me to think about and share out. I think it might be the most useful part of the talk.

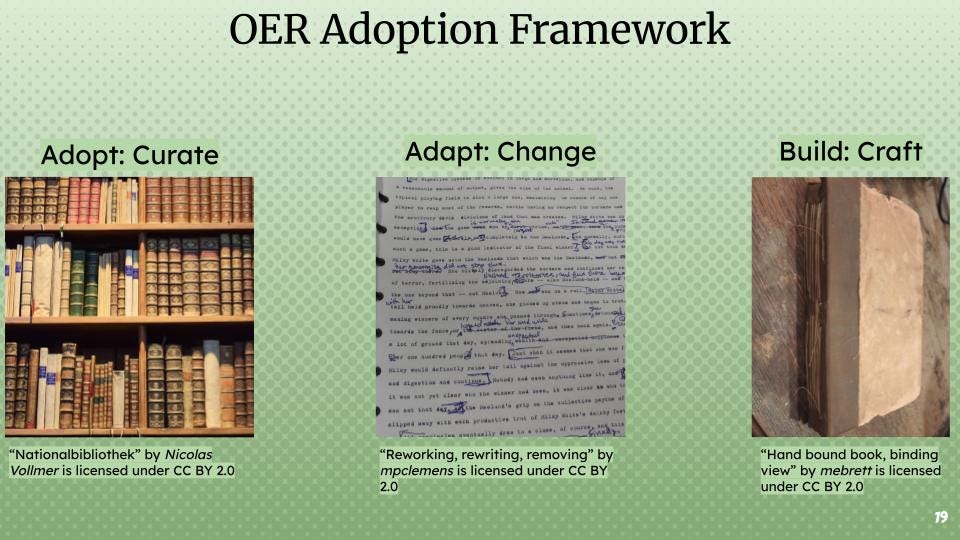

The OER Adoption Framework

I know that the 5Rs is one of the more popular ways of discussing OER, but when working with folks over the years, I’ve often found the Adopt, Adapt, Build model to be the best approach. It provides very clear inroads to framing one’s relationship with OER. I know that I first heard it from Kim Thanos, but I am not sure exactly where it came from. (If it isn’t Kim and you know exactly where it came from, share the source and I’ll happily update this part!)

Under this framework, engagement with OER is presented as three possible approaches:

Adopt: Find an OER and plop it straight into your course.

Adapt: Find an OER, make edits and adjustments for it to fit into your course (and ideally, make it available for others to benefit from).

Build: Create the OER you need and make it available for others.

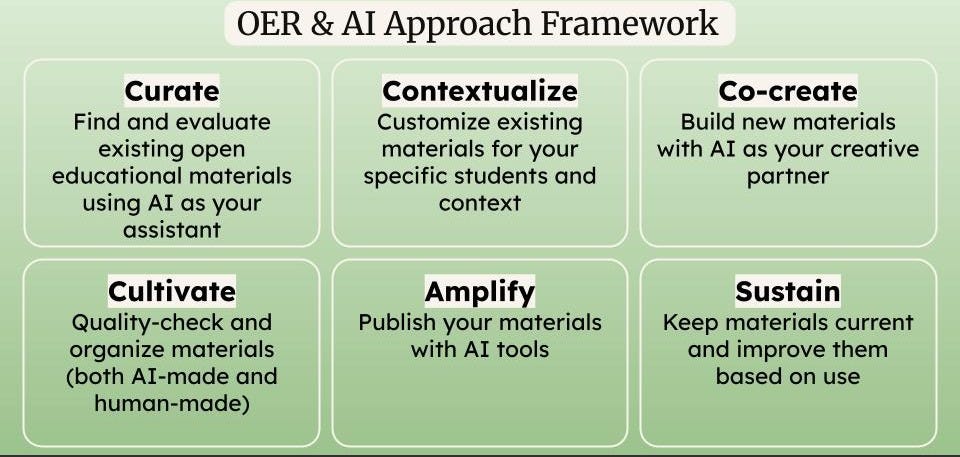

The OER & AI Adoption Framework

Because that’s my default thinking when introducing OER, I wanted to build on it to create a new framework that helps folks figure out how to navigate into, or determine their approach to, OER and AI.

So after some playing, thinking, and talking with folks, I came up with the following. I don’t think it’s comprehensive; I don’t think the categories are mutually exclusive; I do think that it’s a digestible way to help someone step into AI and OER if they are trying to find a way in without being overwhelmed.

Here is what I came up with:

Curate. AI can assist in finding and evaluating open educational resources by searching across multiple OER libraries, translating materials, and identifying what’s missing. Educators define what they need, review AI’s findings, select the best matches, and note gaps to be filled.

Contextualize. AI can adapt existing resources to fit a specific course or student population by adjusting reading levels, localizing examples, generating multiple formats, and adding accessibility features. Faculty specify the context, choose adaptations, review changes, and fine-tune results.

Co-create. When new materials are needed, AI can generate first drafts, suggest fresh approaches, create practice problems, or design interactive elements. Educators provide expertise, guide prompts, refine drafts, and ensure accuracy.

Cultivate. AI can help maintain quality by pulling sources for verification, identifying biases, comparing similar resources, and flagging outdated content. Faculty set standards, make final decisions, add expert insights, and organize collections.

Amplify. To share resources, AI can handle formatting across platforms, generate metadata, and suggest dissemination venues. Educators determine licensing, write transparent AI-use statements, choose where to share, and upload to repositories.

Sustain. AI can review for needed updates, analyze usage data, suggest improvements, and incorporate feedback. Faculty review these suggestions, enact updates, guide improvements, and maintain overall quality.

Note that this is just the framework for how one might find their way into AI and OER. None of this speaks to the other parts of the process, which include discussions of AI limitations and problems (bias, environment, data privacy, etc.), issues about copyright (both where the models draw from and the copyright status of AI; Slide 21 of the presentation includes some framing for that), labor and compensation around OER work, and the like. Those still need to be part of the conversation, but for folks who are looking for a path where these might fit together, this is a good place to start.

I also think it is a good starting point for engaging with faculty and others who might be looking at how GenAI might help them with creating or reimagining learning materials or activities.

Part of the reason I’m thinking about this at the moment is that I’m running a session of Applying Generative AI to Open Educational Resources for EDUCAUSE soon. I would love feedback, especially if you know other models like this, and would love to add other work in this space that I might have missed along the way.

The Update Space

Upcoming Sightings & Shenanigans

EDUCAUSE Learning Lab: Applying Generative AI to Open Educational Resources, September 2025

AI and the Liberal Arts Symposium, Connecticut College. October 17-19, 2025

EDUCAUSE Online Program: Teaching with AI. Virtual. Facilitating sessions: ongoing

Recently Recorded Panels, Talks, & Publications

Intentional Teaching Podcast with Derek Bruff (August 2025). Episode 73: Study Hall with Lance Eaton, Michelle D. Miller, and David Nelson.

Dissertation: Elbow Patches To Eye Patches: A Phenomenographic Study Of Scholarly Practices, Research Literature Access, And Academic Piracy

“In the Room Where It Happens: Generative AI Policy Creation in Higher Education,” co-authored with Esther Brandon, Dana Gavin, and Allison Papini. EDUCAUSE Review (May 2025)

“Does AI have a copyright problem?” in LSE Impact Blog (May 2025).

“Growing Orchids Amid Dandelions” in Inside Higher Ed, co-authored with JT Torres & Deborah Kronenberg (April 2025).

AI Policy Resources

AI Syllabi Policy Repository: 180+ policies (always looking for more- submit your AI syllabus policy here)

AI Institutional Policy Repository: 17 policies (always looking for more- submit your AI syllabus policy here)

Finally, if you are doing interesting things with AI in the teaching and learning space, particularly for higher education, consider being interviewed for this Substack or even contributing. Complete this form and I’ll get back to you soon!

AI+Edu=Simplified by Lance Eaton is licensed under Attribution-ShareAlike 4.0 International

Hey Lance, recently I was collaborating with a computer security instructor, who mentioned that in his field, there are just no good resources for students on key topics, given his audience of future software engineers and the need for recency of content. He mentioned that his recorded lectures, however, contain much of what he feels could be a source of that content. I suggested the possibility of using AI to create his own resources (readings), with his lectures as sources. He uses Perusall as well. We haven't spoken again on this, but I thought you might find this case interesting and not an uncommon need. If you know of anyone who has done this, please let me know! - John from Brandeis

This seems like a useful framework. Now, as a thought experiment, consider what teaching and learning can look like in the 21st century through the lenses of agency, possibility, equity, and technology in a K-12 environment that does not have internet access. What might be learned that's useful for Higher Ed?