AI & The Copyright & Plagiarism Dilemma

A not-so-hot take on the copyright and plagiarism conversation with AI

Over the last year, and in particular as the lawsuits against OpenAI and other generative AI companies have emerged, I’ve been asked for formal and informal opinions about how I make sense of it all or what I think of it. More recently, a conversation on Mastodon and LinkedIn had me writing enough about it that I figured a post here might help me, as well as others, sort some of this out. And given the recent rulings that dismissed key pieces of the lawsuit, it’s also timely.

A Few Caveats

I’m neither a lawyer nor a judge. Much of what follows is my own sense-making from the sidelines, from someone whose time on the field amounts to amateur sportsball at best.

In general, I have quite a biased view of copyright (as you’ll see below) that brightly colors my take on it, so how helpful any of this ultimately is for thinking the issue through is questionable at best.

More specifically, my dissertation focuses on how copyright is so broken in academia that it leads scholars to engage in academic piracy. So while I certainly understand that copyright is a bigger societal consideration, a lot of my thinking has focused specifically on what it means that so much research is closed off because of copyright.

The (Mostly) Straightforward Answer

In a legal sense, I think OpenAI and other AI companies will likely win these lawsuits because of fair use and the idea of transformative work. The US Copyright Office has this to say about transformative uses:

“Additionally, ‘transformative’ uses are more likely to be considered fair. Transformative uses are those that add something new, with a further purpose or different character, and do not substitute for the original use of the work.”

What these AI tools are doing can be understood as entirely transformative. They use works in ways that are entirely new, in several respects.

First, it is not focused on the actual sentences and paragraphs as they materially exist. What large language models focus on are the probabilistic relationships among words (or among vectors, in the case of images). They create different works by mathematically mapping those patterns in response to how people interact with the technology (i.e., prompts). In literal terms, this is clearly different from how humans would “copy” a work. We may say, “Well, that’s what humans do,” but that is how we describe it metaphorically, not what actually happens in our brains. So while we may come up with metaphors, as you’ll see below, I’m wary of metaphors in this space given their history of poorly framing how we think about words, copyright, and ideas.

Second, no individual work is essential to a large language model, which in some ways makes it hard to argue that a copyright violation is happening. Copyright violation happens in relation to a particular work, and it’s pretty clear that removing any one work from a large language model’s training data would have an infinitesimally small impact on the whole.

So why is that important? Because part of the claim is that AI can regenerate works from its training data. Not really. It’s producing probable words in relation to the context provided to solicit that regenerated work. And my guess is that this can be done even when the work isn’t in the data set. Yes, a generative AI tool with a large enough data set can probably recreate, with real accuracy, works that are NOT in its data set.

How? Take Between the World and Me by Ta-Nehisi Coates (who is one of the plaintiffs in one of the suits). Given the amount of writing by and about Coates on the Internet (and in the data set), I would bet that with the right prompt, generative AI could get strikingly close to those opening pages. The prompt would be something like “If Ta-Nehisi Coates were writing a book to his son that explores what it means to grow up Black in a racist society, what would the opening pages look like?” (it would need more context, but that’s the gist).

And that’s transformative: using the collective whole of writing, even in the absence of a given work, to recreate that work closely if not perfectly.

Third, the above not only adds “something new”; it is also different in character in terms of how it is created. There is no guarantee that one CAN recreate a person’s works to a degree that makes the output a substitute for the original use of the work. And one can never be certain that the output IS a copy without also having obtained the original to verify it against. I can only know whether AI has perfectly recreated Chapter 1 of Coates’ book if I have Chapter 1 to compare it with.

But Is It Still Plagiarism?

In the section above, I focused primarily on the legal definition, but that sets aside the moral concerns about generative AI “copying” everyone’s work without permission or attribution and using it to create new works. That moral claim is grounded in the sense that intellectual works are “owned” and that we have a moral obligation to give credit to our sources.

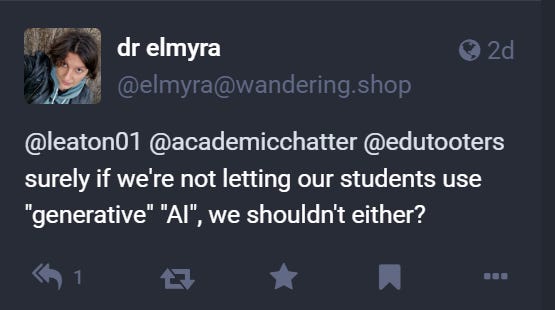

In the absence of credit, we claim that what is happening is plagiarism, and that term is used a lot when talking about what generative AI is doing. For instance, someone on Mastodon recently engaged me when I was sharing some thoughts about the copyright issue in relation to an article I had just read (“How Can Neural Nets Recreate Exact Quotes If They Don't Store Text”). One user responded:

My response was: “I'm generally not in the camp of not letting students use it... and I'm generally in the camp of parity--if students can/can't, then faculty/staff should/shouldn't.” It wasn’t quite clear what vantage point she was taking, but if it was the idea of balance, I was all for that (having previously written about transparent approaches in the LMS for students). She followed that response with:

I replied that “it's nuanced & usually requires more thoughtful conversations about what happened & why.” Again, I wasn’t quite sure what her take was or what she was trying to ask, so I didn’t want to elaborate, but I’m definitely in the camp that thinks we have turned plagiarism into a bigger monster than it needs to be (and I say this as someone who was once so proud to “catch” students committing this “highest of academic crimes.” Good lord!).

In truth, the less power or agency we experience (in our classrooms, our roles, our institutions, and the world at large), the tighter we cling to the things we think are the preserving vestiges of a (utopian) time when things weren’t as hard. In academia, I think that means clinging to things like plagiarism. That is not to say it should never be addressed, but it has antiquated and complicated elements to it (e.g., citation formats and how they can be lorded over a student).

It was also at this point that I started to wonder whether there was a point to the questions. She followed with:

I explained,

“I would also say it's a nuanced consideration because, given how LLMs work, calling it plagiarism is not actually a 1:1 comparison to individual plagiarism and moves us more into the metaphorical space.

Plagiarism is being used in a metaphorical sense--the same way ‘piracy’ is used metaphorically when we talk about people making digital copies of music, videos, or books.

In both cases, we take something new, give it an old name, and therefore confine the discourse to something we're familiar with.

For me, I don't buy it (pun intended?) in either instance. That doesn't mean I don't have issues with AI companies over a variety of things related to AI, but plagiarism is not on that list.”

I think there are two points worth noting here.

First, AI tools should reference source material when they rely significantly on a given set of texts, and some of them are starting to do so. But we also have to determine what we mean by significant. We have to distinguish between content (ideas?) and structure (the presentation of ideas), and that can be incredibly hard to do.

What do I mean by content and structure? Because the outputs of large language models are created by studying the structure of words within their data, how the AI shapes a collection of coherent words (i.e., content/ideas) is intrinsically linked to the totality of that data set, not to an individual work. And what it does with a specific source is identify that source’s structural relation to specific words and mathematically calculate (rightly or wrongly) which words are relevant or useful in responding to a prompt.

Again, this can be described as metaphorically similar to what we do when we draw upon another’s work while thinking and composing sentences and paragraphs, but that is metaphor, not a literal description. When we talk about copying or drawing upon a source, we’re often referring to the gist or central tenet of a source and to a thinking process that engages the work more holistically. AI isn’t looking holistically at the idea but at chunks of letters. Influence is happening, but it is not the same.

Second, “plagiarism” does not feel like the right term for the way AI draws upon sources. I see it being set up to function exactly the way “piracy” does when we discuss digital files: an inaccurate metaphor that traps us and limits our ways of thinking about something new and different.

Claiming “plagiarism” so early in the discourse leaves us few other ways to discuss what is actually happening. As a result, we won’t be able to navigate the downstream effects effectively.

I think about this a lot with copyright and “piracy.” Our fixation on piracy may have helped some artists and creators. But far more often, it has helped IP companies (e.g., Disney, Sony, News Corp) that get to own properties for 70 years after the death of the creators. This has done significant harm to how we can explore and incorporate past ideas into the present. In fact, I spoke about this a few years ago when giving the Liberal Arts Lecture at North Shore Community College: “Vampires Get You Famous, But the Hulk Will Get You Sued.”

Where this has significantly harmed society is in how much academic scholarship (literature that is meant to be given away and accessible) is now locked behind paywalls. This is the crux of my dissertation: how we’ve created a system where scholars need to rely on pirate platforms to access research literature in order to be scholars.

So naming what is actually happening matters significantly, because the wrong name can limit our scope of understanding and possibility.

Concluding Thoughts

One thing I worry about is that if these AI companies lose these lawsuits, it will further weaken fair use, and we should all be concerned about that. That will have implications for scholars, creators, and the culture at large. In truth, I hope that, if nothing else, this might cause us to holistically rethink copyright as it currently exists, because it is not suited for the digital age and continues to hamper creativity, conversation with past culture, and how we make knowledge accessible.

The thing is, I’m not overly excited about the AI companies winning. At the same time, the entities suing them aren’t exactly honest brokers or empowering protectors of creativity, and many of them have themselves benefited from fair use, outright abuse of copyright, and capitalistic practices. So it’s not that I’m anticipating a utopian world where ideas and knowledge can be more easily accessed. I just don’t think there has been enough critical consideration of how shitty copyright and plagiarism are, and applying them to AI doesn’t feel like it will help that.

AI+Edu=Simplified by Lance Eaton is licensed under Attribution-ShareAlike 4.0 International
