AI Priorities and the People’s Problem

What your peers’ responses reveal about confidence, culture, and change in the age of AI.

Introduction

A lot of my work here has focused on ideas, practices, policies, and people in the higher education space. But one question has come up ever since the early days of GenAI: how do we actually help people move through all this change?

That’s where my partner, Christine Eaton, comes in. She’s a certified change management practitioner with deep expertise in human-centered operational and systems change. Together, we launched Anchor Insights Consulting in the last year. We help colleges and universities, especially those with fewer resources, navigate both the sense-making and the day-to-day implementation of GenAI. Our focus is on building the confidence and capability leaders need to keep finding their way in this evolving landscape.

In this piece, we share the findings from the brief survey we ran last month and connect them with some of the lessons we’ve learned from our clients. It’s also a sneak peek at the programming we’ll offer this spring for leaders throughout higher ed institutions to support this work.

— Lance

What We Asked & What You Told Us

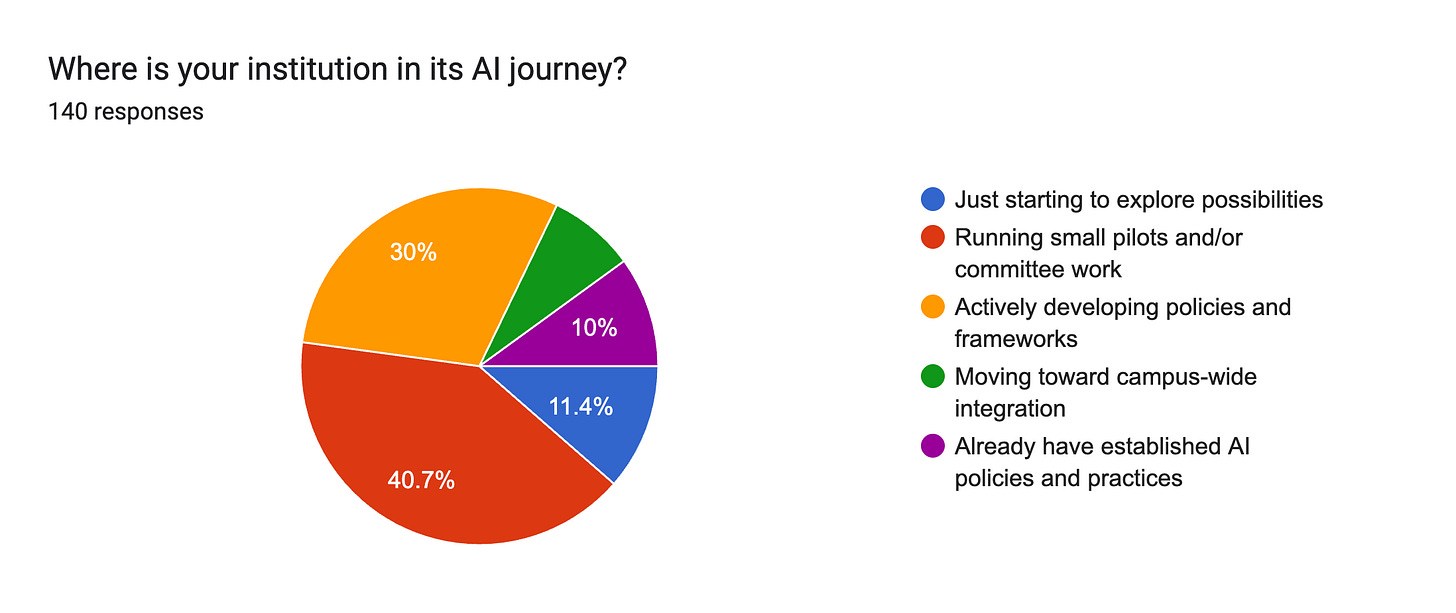

To be clear, our survey wasn’t rigorous. Respondents were readers of this Substack and others in higher ed with whom I shared the survey directly. Around 140 folks completed it, so we don’t want to overstate the results. Still, much of what we found resonates with my experience visiting dozens of colleges over the last 2.5 years and with the work we’ve done with our clients. So, take all of this with a grain of salt, and let’s dig in.

We asked, “As institutions are looking to figure out what to prioritize around AI, what are the three main issues that your campus would benefit from learning more about?” The three most frequently selected responses were cultural change, faculty and student guidance, and policy and governance. In other words, people are thinking about AI through the lens of how humans adapt to it.

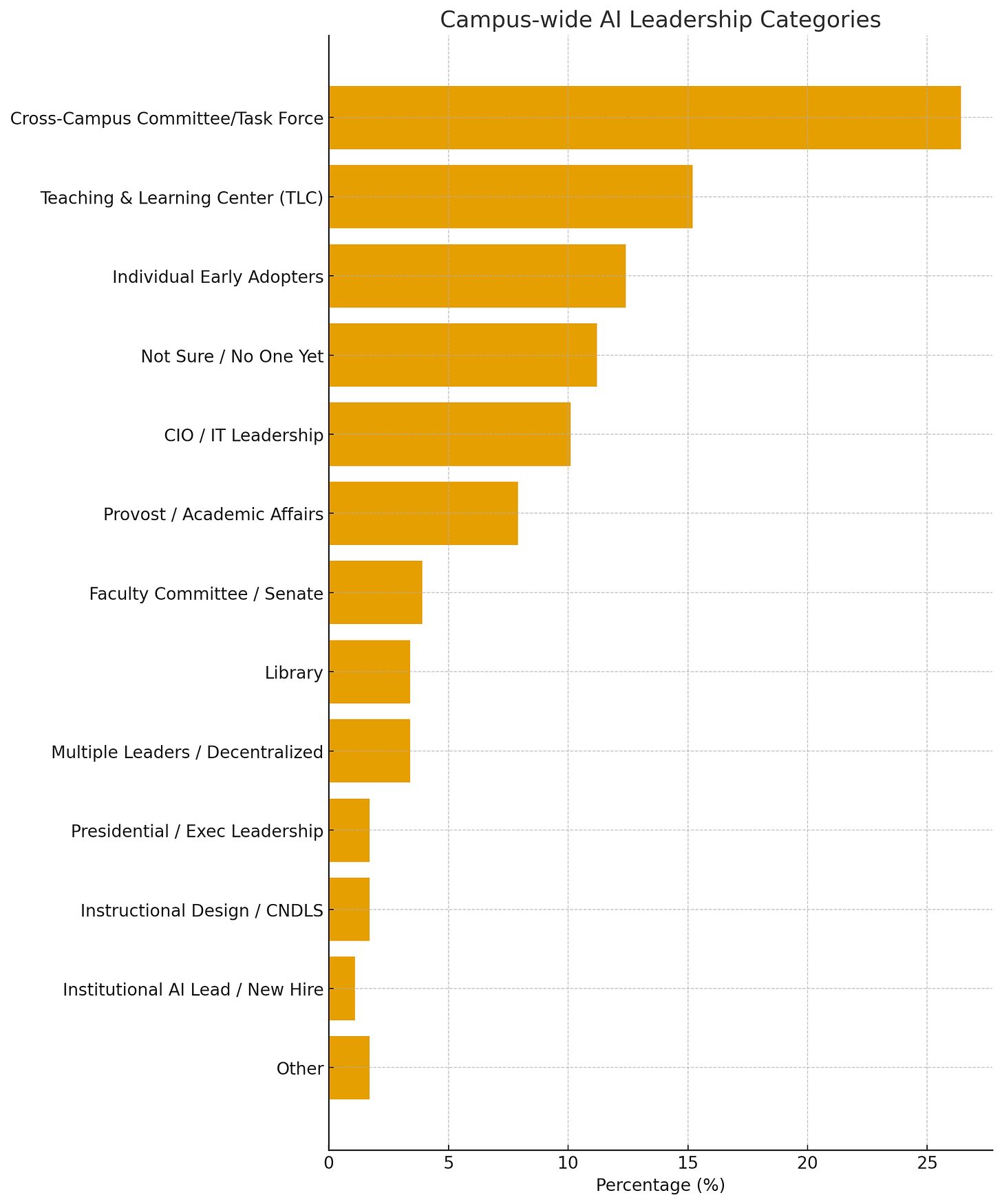

When we asked who’s leading the AI conversation on campuses, the responses painted a familiar picture of both enthusiasm and fragmentation. The most common answer was some version of a cross-campus task force: a group bringing together IT, Academic Affairs, teaching centers, and faculty governance, but often without a clear point of coordination. Teaching and Learning Centers appeared frequently as the practical hub where ideas turn into workshops or classroom experiments, while individual early adopters continue to push the boundaries on their own. Provosts and CIOs are increasingly stepping in, but their efforts tend to follow rather than drive the work. Libraries, though less often mentioned, stand out as critical yet under-recognized partners in this space.

Overall, the results suggest that higher ed is still in an early phase of distributed leadership, with lots of people talking, experimenting, and coordinating loosely, but few institutions with an integrated, sustained strategy. Many people are exploring the elephant, but few are exploring it together or strategically. It’s healthy curiosity without coordination.

The Questions for Leadership

The final question, “What’s one question you wish your leadership team could answer about AI?”, surfaced many insights and considerations. As we read through the open responses, we saw both variation and commonality: a range of questions that leadership hasn’t fully answered yet. Where do we even begin? How do we balance innovation with accountability? How do we make sure AI helps every discipline, not just the tech-savvy ones?

Beneath those questions is something deeper: a call for confidence. Confidence that faculty can teach with AI ethically and effectively. Confidence that students can use it wisely. Confidence that institutions can lead responsibly in uncharted waters. What people are really asking is, “Help us feel steady as we navigate what’s next.”

We’ll dig deeper into those themes in an upcoming post. For now, it’s worth recognizing how many institutions are struggling to find their way, and that there is a lot of common ground to build from.

In its global study on AI adoption, Prosci reported that 43 percent of organizations said their biggest hurdle wasn’t technology, but the learning curve. Another 38 percent cited user proficiency as the main barrier. Confidence is part of the learning process; people gain confidence by having the opportunity to experiment, fail, and iterate. So it makes sense that much of what surfaced in the survey concerns how people feel about using these tools, and a desire for more from leadership in understanding those feelings.

Building a Pathway

When we stepped back, three clear workshop ideas emerged, along with a pathway for leading AI with clarity and care in and across an institution. The forthcoming series (Navigating AI in Higher Ed) reflects three layers of leadership that build on one another.

The first is Leading the Human Side of AI. Leadership begins with culture: naming uncertainty, modeling curiosity, and creating space for honest dialogue. Some institutions are already doing this work, leaning into the uncertainty and facilitating conversations about the AI milieu. Acknowledging the confusion, and that the path itself is challenging, builds trust. And trust beats tools every time. So this portion of the programming looks at…

Next is Guiding AI Implementation Across Campus. By building shared language, practices, and experiences with AI, leaders can support the campus’s various constituencies. Building on the trust established in the first session, this session helps leaders across the institution work with colleagues to test and capture what is working, and how it was implemented, as more applications of AI become apparent.

Finally, Governing AI Wisely. Governance is often framed as stifling innovation, yet it serves as an important anchor, one particularly needed in higher ed, where our impact extends beyond our students to our staff and communities. This session focuses on building trust-based mechanisms within governance that avoid heavy-handed control and allow for tempered innovation.

Together, these three layers create the conditions for greater trust and confidence, which in turn make room for healthy AI adoption. Underlying all of this is the belief that transformation needs to start with a conversation and a willingness to explore together before we get into tools or policy considerations.

Still Curious

Resistance? Normal.

Fatigue? Understandable.

But curiosity can be contagious, especially when modeled by leaders.

So this spring, we’ll explore these insights with leaders positioned throughout higher ed institutions. Each session stands on its own, so you can join one, two, or all three depending on your goals and schedule. Together, they form a pathway for leading AI with clarity, confidence, and care.

February: Leading the Human Side of AI

March: Guiding AI Literacy Across Campus

April: Governing AI Wisely

We’ll share dates soon, but you can sign up here for early updates and to learn more, or pass this along to colleagues who might want to join you.

At the end of the day, AI doesn’t change institutions; people do. And we’re here to help you lead that change.

AI Disclosure

This article was edited with the assistance of generative AI tools for clarity and flow; all interpretation and final wording are our own.

The Update Space

Upcoming Sightings & Shenanigans

I’m co-presenting twice at the POD Network Annual Conference, November 20-23:

Pre-conference workshop (November 19) with Rebecca Darling: Minimum Viable Practices (MVPs): Crafting Sustainable Faculty Development.

Birds of a Feather Session with JT Torres: Orchids Among Dandelions: Nurturing a Healthy Future for Educational Development

Teaching in Stereo: How Open Education Gets Louder with AI, RIOS Institute. December 4, 2025.

EDUCAUSE Online Program: Teaching with AI. Virtual. Facilitating sessions: ongoing

Recently Recorded Panels, Talks, & Publications

David Bachman interviewed me on his Substack, Entropy Bonus (November).

The AI Diatribe with Jason Low (November): Episode 17: Can Universities Keep Pace With AI?

The Opposite of Cheating Podcast with Dr. Tricia Bertram Gallant (October 2025): Season 2, Episode 31.

The Learning Stack Podcast with Thomas Thompson (August 2025). “(i)nnovations, AI, Pirates, and Access”.

Intentional Teaching Podcast with Derek Bruff (August 2025). Episode 73: Study Hall with Lance Eaton, Michelle D. Miller, and David Nelson.

Dissertation: Elbow Patches To Eye Patches: A Phenomenographic Study Of Scholarly Practices, Research Literature Access, And Academic Piracy

“In the Room Where It Happens: Generative AI Policy Creation in Higher Education,” co-authored with Esther Brandon, Dana Gavin and Allison Papini. EDUCAUSE Review (May 2025)

“Does AI have a copyright problem?” in LSE Impact Blog (May 2025).

“Growing Orchids Amid Dandelions” in Inside Higher Ed, co-authored with JT Torres & Deborah Kronenberg (April 2025).

AI Policy Resources

AI Syllabi Policy Repository: 190+ policies (always looking for more; submit your AI syllabus policy here)

AI Institutional Policy Repository: 17 policies (always looking for more; submit your AI institutional policy here)

Finally, if you are doing interesting things with AI in the teaching and learning space, particularly for higher education, consider being interviewed for this Substack or even contributing. Complete this form and I’ll get back to you soon!

AI+Edu=Simplified by Lance Eaton is licensed under Attribution-ShareAlike 4.0 International
