Getting to an AI Policy Part 2: A Moving Target

Congratulations, You Created a Policy…Now Change It!

In part 1 of this 2-part (for now) series, I shared some insights I’ve gathered over the last two years about the hesitancy and challenges higher education institutions are having with putting out an official policy about GenAI. In this piece, I’d like to offer a framework for navigating the bigger challenge around AI policy: how long will it actually last?

When Do You Update Your Policy?

GenAI itself came with a bunch of new affordances that we’re all still adapting to and figuring out. Yet every few days, a new aspect, usage, output, etc. seems to be announced by one of the big AI companies. Personally, I have trouble keeping track of it all and discerning what’s substantive and what’s not, never mind connecting it to higher ed institutions’ missions. So what follows is an exploration of how the changing AI landscape might be navigated within AI policies, task forces, or the entities and individuals at a given institution that have their finger on the pulse (ok, maybe it feels more like fingers on the faultline?).

Ideally (hopefully?) institutions have developed policies and guidelines (along with tool selection, deployment of support, and strategy). But it’s a tricky balance: you want to get a workable policy out as soon as possible, but what happens when the ground shifts? Well, it’s obvious: update the policy. But what does that mean, how do you decide, and who decides?

Creating a policy probably (or hopefully) also came with communication about how it will be updated and possibly how often (and maybe, just maybe, how to contribute to that updating process). But rather than letting a fixed schedule alone dictate updates, there might be a better mechanism to help policies, committees, or task forces determine whether updates are needed.

Something I’ve been thinking a lot about over the last two years, and something that feels different from previous technologies that have impacted education, is that AI (GenAI and whatever comes next) does not feel fixed but emergent. What can be done with AI continues to change, and new uses appear routinely. New affordances suggest new challenges and offer new opportunities. The most recent discussion about Operator points to a tool that can actually do things on your screen, something that clearly has implications for asynchronous learning activities.

This emergent experience has me thinking about this question: when these changes appear, are they a change of category or a change of degree? When I think about degrees of change with a technology, it often means that you’re still working within the same set of parameters of the technology but something has changed your experience of the tool. For instance, a degree of change would be when Word provided a more expansive ribbon of things you could do to a file. A categorical change is often going to reflect a change in how you use the tool. For instance, a categorical change would be when Word introduced Dictation and I could add to documents by talking. That changed how I used Word (or other word processing programs).

Two things to note. The first is that these are loose definitions, but I think the mechanism holds true and can be used by institutions. And, of course, some changes are going to fit in more than one category. The second is that it’s admittedly a false dichotomy; enough degrees of change will likely add up to a category change. Still, it’s a useful fiction to play with to make sense of and sort through changes that seem to come so fast.

A Starting Rubric

A thing that I’ve learned over the last two years is that the policy is not just about the policy but also about perception. I suppose I already knew this, but I’m seeing it more concretely these days. Having a policy matters because it communicates back to students and faculty that the institution is paying attention to and aware of this. (Note: Guidelines can do the same thing, but “policy” carries some weight.) It’s helpful in other ways, of course: it provides clarity for students and faculty, with boundaries in a place where the boundaries continue to blur. A policy indicates awareness but also commitment, so devising and clarifying some kind of mechanism to update it and address the changing technology can more easily communicate how that commitment is ongoing.

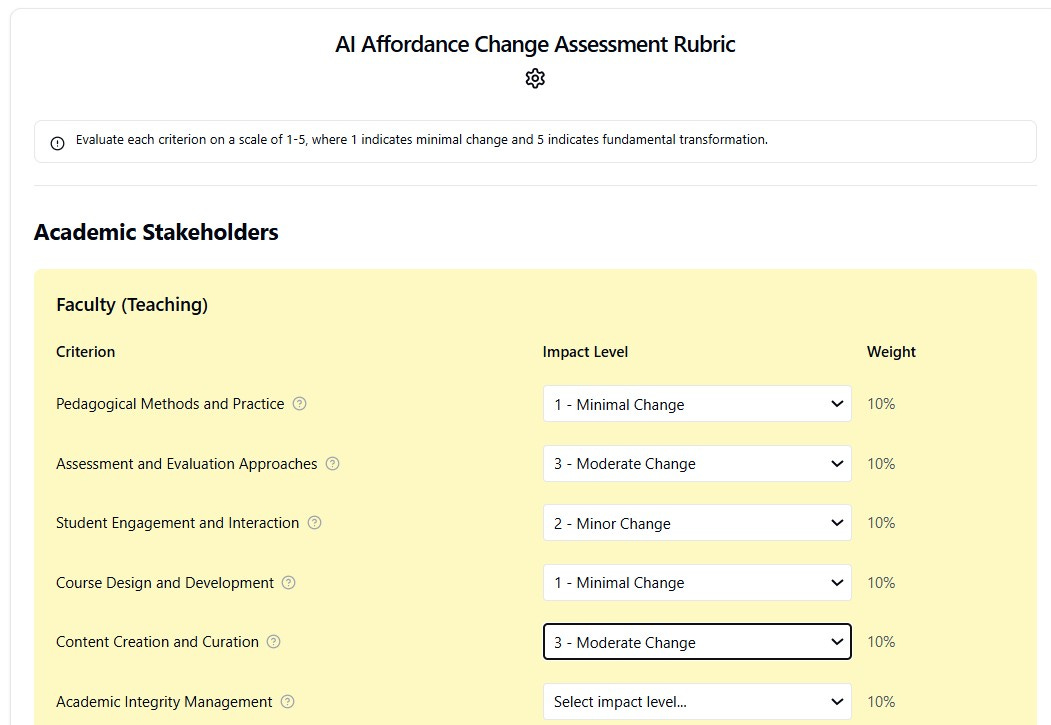

Using Claude, I created this interactive rubric as a proof of concept to consider. The idea would be to look at a new feature of AI tools and examine how deeply it changes each aspect. Again, the rubric could have more criteria for evaluation or fewer. It could have different weights and the like (in fact, if you have a Claude Pro account, you can remix it, if you want). There could be different groupings. But the point is to create this kind of mechanism, one that reflects the different concerns and considerations of an institution, attributes weights to them, and gets used, if for nothing else than for individual committee members to score a change and have a conversation about how and why they attached different values.
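To make the scoring idea concrete, here is a minimal sketch in Python of how a committee might tally such a rubric. Everything specific in it is an assumption for illustration: the criteria names, the weights, the 0 to 4 scale, and the cutoff between "degree" and "category" are hypothetical and are not taken from the interactive rubric itself.

```python
from statistics import mean

# Hypothetical criteria and integer weights; an institution would choose its own.
WEIGHTS = {
    "teaching_and_learning": 4,
    "academic_integrity": 3,
    "privacy_and_data": 2,
    "support_and_training": 1,
}

# Assumed scale: 0 = no change, up to 4 = changes how the tool is used.
# Assumed cutoff: a weighted average at or above this counts as a category change.
CATEGORY_THRESHOLD = 2.5


def weighted_score(scores):
    """Weighted average of one member's scores across all criteria."""
    total_weight = sum(WEIGHTS.values())
    return sum(WEIGHTS[c] * scores[c] for c in WEIGHTS) / total_weight


def assess(member_scores):
    """Summarize the committee's scores for one new AI feature."""
    per_member = {name: weighted_score(s) for name, s in member_scores.items()}
    overall = mean(per_member.values())
    # The criterion with the widest gap between members is a good place
    # to start the conversation about why people scored differently.
    spread = {
        c: max(s[c] for s in member_scores.values())
        - min(s[c] for s in member_scores.values())
        for c in WEIGHTS
    }
    return {
        "per_member": per_member,
        "overall": overall,
        "kind": "category" if overall >= CATEGORY_THRESHOLD else "degree",
        "discuss_first": max(spread, key=spread.get),
    }


# Made-up scores from two committee members for a single new feature.
example = {
    "member_a": {"teaching_and_learning": 4, "academic_integrity": 3,
                 "privacy_and_data": 2, "support_and_training": 1},
    "member_b": {"teaching_and_learning": 2, "academic_integrity": 3,
                 "privacy_and_data": 2, "support_and_training": 1},
}
print(assess(example))
```

The number that comes out matters less than the `discuss_first` field: surfacing where members disagree most is what prompts the conversation about how and why they attached different values.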

A mechanism like this could help committees make clearer judgments about when to update their policy and be more transparent about why they did or didn’t. Given the whiplash-inducing pace of change, it can provide some stability of process and response. Without a mechanism like this for navigating the changes, even the best-crafted policies risk becoming irrelevant as the technology, and the expectations of institutional stakeholders, continue to evolve.

As I said, it’s a working idea, but I think that even as an exercise it could really help institutions think about how and why to update their policy.

But I’m curious to hear from y’all:

What methods of updating are your institutions considering?

How are you thinking about the scales of change with AI (e.g., the move from GenAI to Agentic AI) and their implications for teaching, learning, research, and work?

What would be in your rubric if you created one to guide this dynamic of degree or category of change?

Upcoming Events

I’ve got a couple more public events coming up that I figured I’d also share!

AI to Open Educational Resources: Enhancing Learning and Solving Ethical Challenges – March 12, 17, 20, 26 from 12pm-1:30pm ET. This mini course will be offered through EDUCAUSE and will include live sessions where we delve into the relationship between GenAI and OER and the challenges that come with it.

This is a 2-week asynchronous program from EDUCAUSE that offers synchronous drop-in sessions. The program is facilitated by either me or Judy Lewandowski. It’s really good for folks still getting situated with GenAI. Below are the sessions that I’m facilitating:

NERCOMP Annual Conference: My colleague and friend, Antonia Levy, and I are doing a facilitated discussion on Actionable Insights for Generative AI, Accessibility, and Open Educational Resources on April 1, 2025 from 11:30AM–12:15PM. If you’re attending the conference, we hope to see you!

AI+Edu=Simplified by Lance Eaton is licensed under Attribution-ShareAlike 4.0 International

A lot of school districts are rushing to draft standalone AI policies, but I had an insightful conversation with a forward thinking Superintendent, and she shared a different approach that resonated with me. Instead of having a dedicated AI policy, she believes AI should be embedded within existing policies—plagiarism, bullying, communication, and academic integrity—so that the policy remains relevant and enforceable, no matter how technology evolves.

Her argument makes sense: We don’t have “telephone policies”, we have “communication policies.” The same should apply to AI. If we build AI into our overall instructional, ethical, and behavioral guidelines, it ensures that policies remain future-proof and aren’t reactionary to specific tools.

The AI Affordance Change Assessment Rubric is quite useful, Lance. It goes to show that institutions will need good project managers to make good policy happen.