"...In the mode of 'we don't know'"

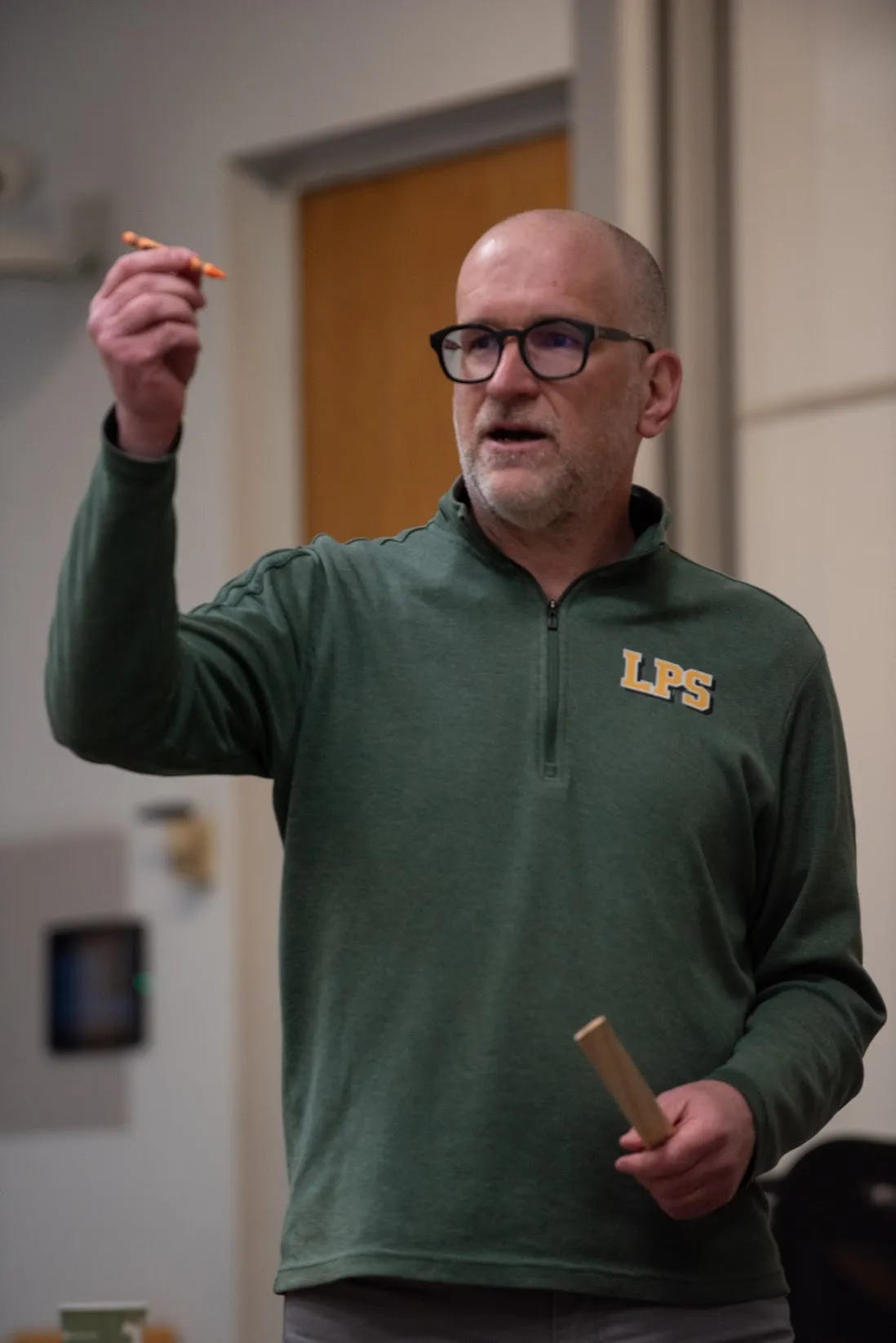

Part 1 of an interview with Rob Nelson

As part of my work in this space, I want to highlight some of the folks I’ve been in conversation with or learning from over the last few years as we navigate teaching and learning in the age of AI. I’m happy to introduce Rob Nelson as the first guest in this series. If you have thoughts about AI and education, particularly in the higher education space, consider being interviewed for this Substack.

Rob’s Introduction

Rob Nelson brings nearly two decades of experience from his time in the Office of the Provost at the University of Pennsylvania, where he led the implementation of major enterprise digital technologies, everything from Canvas as the campus-wide learning management system to Banner as the university’s student information system. He is also the author of the AI Log Substack (which you should totally subscribe to!).

Part 1

Lance Eaton: Take us back to when you first started hearing about GenAI. What was that experience for you?

Rob Nelson: It was right on the release of ChatGPT and those early essays. I remember in particular reading stuff in The Atlantic by teachers who were freaking out, saying this is the end of teaching and the end of high school and college writing. I go back again and again to John Warner’s ChatGPT Can't Kill Anything Worth Preserving, which let me think about this as an opportunity for positive change. That really informed my project, which is how to think about AI not just as a set of challenges or problems for educators, but as an opportunity to think about changing the institutional arrangements that structure our teaching and learning.

Lance Eaton: Tell me more about that. I hear within that engaging with and thinking about power and relationships differently.

Rob Nelson: It is forcing institutions to either double down on surveillance and control or give it up. It is entirely possible that these things are going to get way worse. But I'm hopeful that our response will bring a collective understanding of the real shortcomings of our approach, which was very much focused on assessing outcomes and not engaging with the processes we need to follow in order to teach and learn effectively. Institutions have been structured around outputs and outcomes while neglecting processes. When I think about what opportunities there are for changes, it's about really attending to process and making that part of our conversation around teaching, both at the institutional level but also in specific disciplines and classrooms.

Lance Eaton: When you talk about balancing or recognizing there's the potential for it to get a lot worse, and also this direction you're taking it of wanting to seize upon the possibility, can you imagine both happening? If so, what does that look like (with the crystal ball we all have right now)?

Rob Nelson: Where I find hope is that technological change doesn't happen in a vacuum. There's a social and political context for the introduction of any new technology. The time between AI coming into existence and it being diffused throughout our society is a very interesting moment. When I think about that context, there's a lot of destruction of institutions and defunding of programs and initiatives. That is creating challenges so that there will be diversity in how institutions respond and how state systems respond, and how large school districts respond. What I'm hoping is that diversity allows for true innovation and that's where we'll see pockets of “wow, this actually can be used in ways that are effective, and here are some new practices. We will find freedom to reinvent things.”

Lance Eaton: It reminds me of why we have 50 states in the US, and I've heard it described as we're basically 51 different experiments in democracy. I appreciate that particularly as I think about other models of education that are hierarchical to a degree in which the entire nation's school system can only make any changes to a course that requires at least two years going up and down the chain of command. Ok, let's step into the classroom and explore how do you start to engage with AI in your teaching?

Rob Nelson: I taught for the first time with a large language model tool last fall (2024) and used it primarily as a mechanism to introduce a new layer of peer review. My students were using this large language model tool called JeepyTA, which is something I had access to at Penn GSE where I teach. I added it to the drafting process of what is a long history research paper that they were assigned. I would say it was successful. The students certainly thought so. It gave us a platform to talk about the potential educational value of generative AI and led me to think about what I'm going to do next. When I teach this fall, I'll be teaching a very different class that's specifically on AI and higher education.

Lance Eaton: You said they responded positively. What were some of the things you learned from them? What were some of the things that surprised you from engaging your students this way?

Rob Nelson: There was a real diversity of comfort and experience with generative AI among the students in the class. We spent a lot of time comparing notes, discussing who had used ChatGPT and who hadn't. ChatGPT was the primary tool that we talked about. Then the actual technologists who run JeepyTA came to class, and we talked with them about what the tool does and how it works. That combination of use and discussion with the toolmakers created a lot of insight. In terms of assessing the functionality or value, there was a little bit of a letdown. I was surprised by how–I won't say how well it went–but rather how smoothly it went. We just plugged right into the peer review process in a way that didn't create a lot of confusion or friction. I saw enough value there to think this probably worked. And again, the students reinforced that. But I wanted more critical analysis, and so this fall I’m setting up our use of LLM tools to create that.

Lance Eaton: I can imagine somebody reading about this, and the question they're going to wonder about is how do you know, or what level of certainty, or what ways/methods could you feel some degree of certainty that the students were using the AI tool appropriately? What were your feedback mechanisms for just getting a sense of that?

Rob Nelson: I teach in a structured, active in-class learning environment, and so what happens in class is right there and visible to me. I sit at the tables and interact with the students. The actual peer review exercise was that I printed out the student drafts and the JeepyTA comments that go with each draft. The draft and the comments were what the students worked with during our peer review workshop. So my confidence in how it is used is based on listening to the conversations and participating in some of those conversations. I saw the way that they were using it. I would say that JeepyTA added value in the sense that students really often have trouble getting past the sense that their goal is to support their fellow students in a “good job” way. That's the kind of feedback that everybody starts with. It's often difficult for students to give more critical feedback. JeepyTA helped with that because it gave them critical language that they could use to say, “This is what JeepyTA said, and I see this here.”

Lance Eaton: What I hear is that what made this possible is being in the same physical class together, being able to disperse the materials out to the individual students, and all that. Could you imagine other iterations when you're in synchronous spaces like this space we’re in right now or asynchronous environments?

Rob Nelson: I think that's really hard. One of the things that I was enormously pleased to be able to do is to experiment with this in a physical classroom where we're all in the same environment learning together. It's hard for me to imagine going back to my teaching in the previous four years, which was all online, and think about how I would be able to do this. To some extent, the exercise could be very similar, but the fact that I would be distributing those sorts of documents digitally and we'd be working together digitally would create challenges for how we would understand and experience it.

Lance Eaton: In my own mind, I want to workshop the heck out of that to find different ways to translate it, but I digress. Ok, you brought the students in, you had them starting to use this tool, which for some, it was new. To what degree did you experience either students being anxious around using AI in writing or hesitation about this in any way?

Rob Nelson: The initial hesitation was around the idea that using it at all was cheating. I was the one to bring up using LLMs and to offer this as an experiment. I did so in a way that tried to deflate anxieties by saying that I was encouraging them to use the tools, and all I would ask them to do is to come back into class and reflect on their use of the tools. Even beyond the use of JeepyTA in the classroom itself, they had permission to use ChatGPT or whatever tools they wanted in the drafting process. All I asked was that they bring back that experience and talk about it. We had some good class discussions. I would say the class as a whole was very worried about the idea that AI would replace the skill development that they were looking to get out of the class. There was an awareness of the downsides, and our discussion was informed by some of the more vocal students who were concerned about that.

Lance Eaton: What a great space to have developed as an educator, where students are talking about their bigger, broader concerns about the skills they're developing and not worried about the course itself.

Rob Nelson: I'm very lucky. The class I taught was actually a graduate class in the school of education for higher education master's and doctoral students. So they were a pretty self-aware group. When I teach this fall on How AI is Changing Higher Education, I’ll teach a section of my class for that audience, but I'm also teaching a section of the class for incoming undergraduates in the College of Arts and Sciences. I may not be so lucky with the undergraduates. I think I'll need to do some more work with them on deflating some of the anxiety around grading and credentialing and all the things that come with the undergraduate experience of figuring out college.

Lance Eaton: But I would imagine in your experience, in general, but more specifically, already having launched and started to play with this, that it ideally gets easier each time. I imagine it gives a little bit more confidence or comfortability with the likelihood that it's going to get messy.

Rob Nelson: It does. We're going to be using JeepyTA for a similar writing feedback mechanism in the class. The students are going to be asked to write blog posts. They may or may not make them public, but JeepyTA is going to be programmed in order to give them feedback on their blog writing as part of the peer review process. But I'm pushing my own comfort level. I haven't made a final decision, but right now, I'm planning to use Boodlebox as a technology that allows students to build an educational chatbot. One of the structured activities is going to be in groups. They will build a chatbot designed to be an educational tool or resource for people who want to learn about some aspect of AI. I'm really nervous about that, excited about it, and perfectly willing to have it blow up in my face, but the experience will be a chance to reflect on what a chatbot is and what its educational value might be.

Lance Eaton: I love that. That is letting them get their hands dirty. Drawing a little bigger broader picture as you're thinking about that activity and some of the things that you've been doing, how do you evaluate the broader impact of AI around students whether it's their writing development, their confidence, their learning, what are you seeing with your students and just as somebody who's deeply versed in this discussion?

Rob Nelson: One of the things I really valued about the class, and I think this again was me being lucky in the students who had enrolled, was that they were perfectly willing to be vulnerable and unsure about their understanding of AI. That's something I try to cultivate and perform myself as a way of sort of modeling what I want for my students. But with the classes I'll teach this fall, I'm prepared for it not to go so well. There's just so much conflict and confusion around the educational value of these tools: what it means to use AI in terms of cheating and misconduct, but also in terms of replacing the writing and thinking. I still think we're very much in the mode of “we don't know.” That's allowing some of the louder voices to dominate the discourse, but I'm hopeful that as we work through these questions, there'll be more clarity. And that's certainly one of the things I'm hoping for out of the class.

Lance Eaton: For other educators who have kept AI at arm’s length or have been hesitant for a variety of reasons, what do you see as the one small step they might take tomorrow or in the next class to explore these tools a bit more pedagogically and start to think about them a little more intentionally? What should they be thinking about, or how should they be stepping into AI's role in their courses?

Rob Nelson: I know this cuts against the grain of most people's habits when it comes to these kinds of things, but I believe the first step should be to ask your students about it. When I was preparing for the class that I taught last fall, I had coffee with former students and set up some scheduled Zoom calls to ask them what they thought I should do and get observations about what they were hearing and thinking about AI. That student perspective is missing from so many of our conversations. It's just a really crucial element of this whole figuring this out together.

Lance Eaton: Yes, I'm there 100%. Ask students, find out where they're struggling. I see a lot more of that happening now, and it's just powerful to see. I keep thinking about the world they're emerging into is so fundamentally different from the college we went to. On all these levels, we'll never understand. It reminds me of the New Yorker piece where the author, Hua Hsu, interviewed different students who were in a variety of ways using AI appropriately or inappropriately. There’s a line where he says, “...part of their effort went to editing out anything in their college experiences that felt extraneous. They were radically resourceful.” That sticks with me a lot because I don't think we understand the ways life is lived when you're an undergraduate in 2025. For most of us, it's been way too long since we were undergraduates ourselves.

Rob Nelson: Or high school students. One of the things I'm most excited about is teaching this first-year seminar with incoming students. One of the ways I'm hoping to deflate some of their anxiety is to start by talking with them about their high school experience. I’ll start with don't worry about college yet. Just tell me what it was like to be the first group of high school students who actually had access to ChatGPT. What was that like?

Lance Eaton: I love that as a starting question. For many of us, it’s just so hard to understand.

Join us back here for part 2 of the interview, where Rob will dive into some of the other changes in his career he is stepping into regarding AI.

The Update Space

Upcoming Sightings & Shenanigans

EDUCAUSE Online Program: Teaching with AI. Virtual. Facilitating sessions: ongoing

Public AI Summit, Virtual, Free from Data Nutrition Project & metaLAB at Harvard. August 13-14, 2025

AI and the Liberal Arts Symposium, Connecticut College. October 17-19, 2025

Recently Recorded Panels, Talks, & Publications

Dissertation: Elbow Patches To Eye Patches: A Phenomenographic Study Of Scholarly Practices, Research Literature Access, And Academic Piracy

“In the Room Where It Happens: Generative AI Policy Creation in Higher Education” co-authored with Esther Brandon, Dana Gavin and Allison Papini. EDUCAUSE Review (May 2025)

“Does AI have a copyright problem?” in LSE Impact Blog (May 2025).

“Growing Orchids Amid Dandelions” in Inside Higher Ed, co-authored with JT Torres & Deborah Kronenberg (April 2025).

Bristol Community College Professional Day. My talk on “DestAIbilizing or EnAIbling?“ is available to watch (February 2025).

OE Week Live! March 5 Open Exchange on AI with Jonathan Poritz (Independent Consultant in Open Education), Amy Collier and Tom Woodward (Middlebury College), Alegria Ribadeneira (Colorado State University - Pueblo) & Liza Long (College of Western Idaho)

Reclaim Hosting TV: Technology & Society: Generative AI with Autumm Caines

2024 Open Education Conference Recording (recently posted from October 2024): Openness As Attitude, Vulnerability as Practice: Finding Our Way With GenAI Maha Bali & Anna Mills

AI Policy Resources

AI Syllabi Policy Repository: 175+ policies (always looking for more- submit your AI syllabus policy here)

AI Institutional Policy Repository: 17 policies (always looking for more- submit your AI syllabus policy here)

AI+Edu=Simplified by Lance Eaton is licensed under Attribution-ShareAlike 4.0 International
