Results May Vary

On Custom Instructions with GenAI Tools...

I’m sharing today about custom instructions and my use of them across several AI tools (paid versions of ChatGPT, Gemini, and Claude). I want to highlight what I’m doing and how it’s going, and invite readers to share in the comments some of the custom instructions they find helpful.

I’ve been in a few conversations lately that reminded me that not everyone, even some folks seasoned with GenAI, knows about custom instructions or how to set them up to better support their work. And, of course, they are, like all things GenAI, highly imperfect!

I’ll include and discuss each one below, but if you want to keep abreast of my custom instructions, I’ll be placing them here as I adjust and update them so folks can see the changes over time.

Custom Instructions

What are custom instructions?

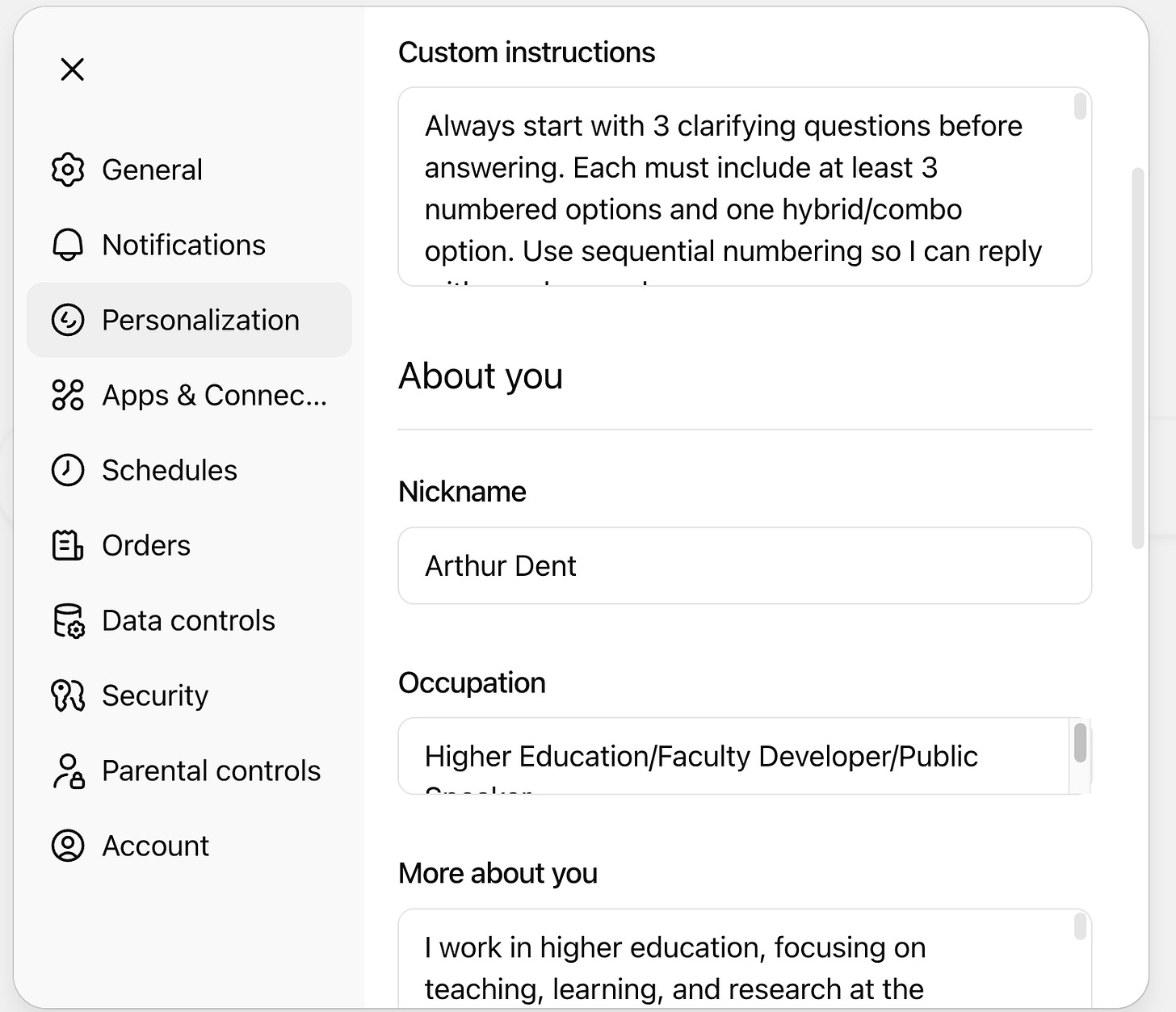

Most of the current GenAI tools have a place for custom instructions, even if they call them something else. ChatGPT calls them Custom Instructions and “More about you” while Gemini calls them “Your instructions for Gemini” and Claude calls them “What personal preferences should Claude consider in responses?”. You can typically find these in the tool’s settings as such:

Now, Custom Instructions are different from a GenAI tool’s “memory” feature. Several tools have a memory feature that automatically stores things about you that the AI judges to be of continuing relevance to how you use it. The main difference is that you have full control over Custom Instructions and what goes in them. With Memory, you can usually delete or add to what is stored, but you can’t necessarily replace it outright. That’s as of 11/15/2025 and may change in the future.

But instructions are useful because they allow you to make the GenAI more accurately respond to your context. What follows is me sharing each and explaining why I have it as such.

The Instructions

Assume all education-related topics concern higher education—college and university teaching, learning, and research.

About 75% of what I use GenAI for involves this kind of work, whether for my day job or for consulting and talks. This saves me a significant amount of time typing in context like “you’re a faculty developer…”. It becomes the default. Of course, there’s the other 25%, and you’ll see below how I handle that.

Prioritize scholarly accuracy, verified information, and conceptual nuance. Ground analytic or theoretical answers in peer-reviewed research and include APA-formatted citations with real sources only when evidence is referenced.

Because of my focus, I want not just to limit the junk but also to discover new sources. This instruction doesn’t always return sources (sometimes the request itself indicates it’s unnecessary), but when it does, they are much more often accurate and relevant. That said, it has not helped my “To read” pile of books and articles.

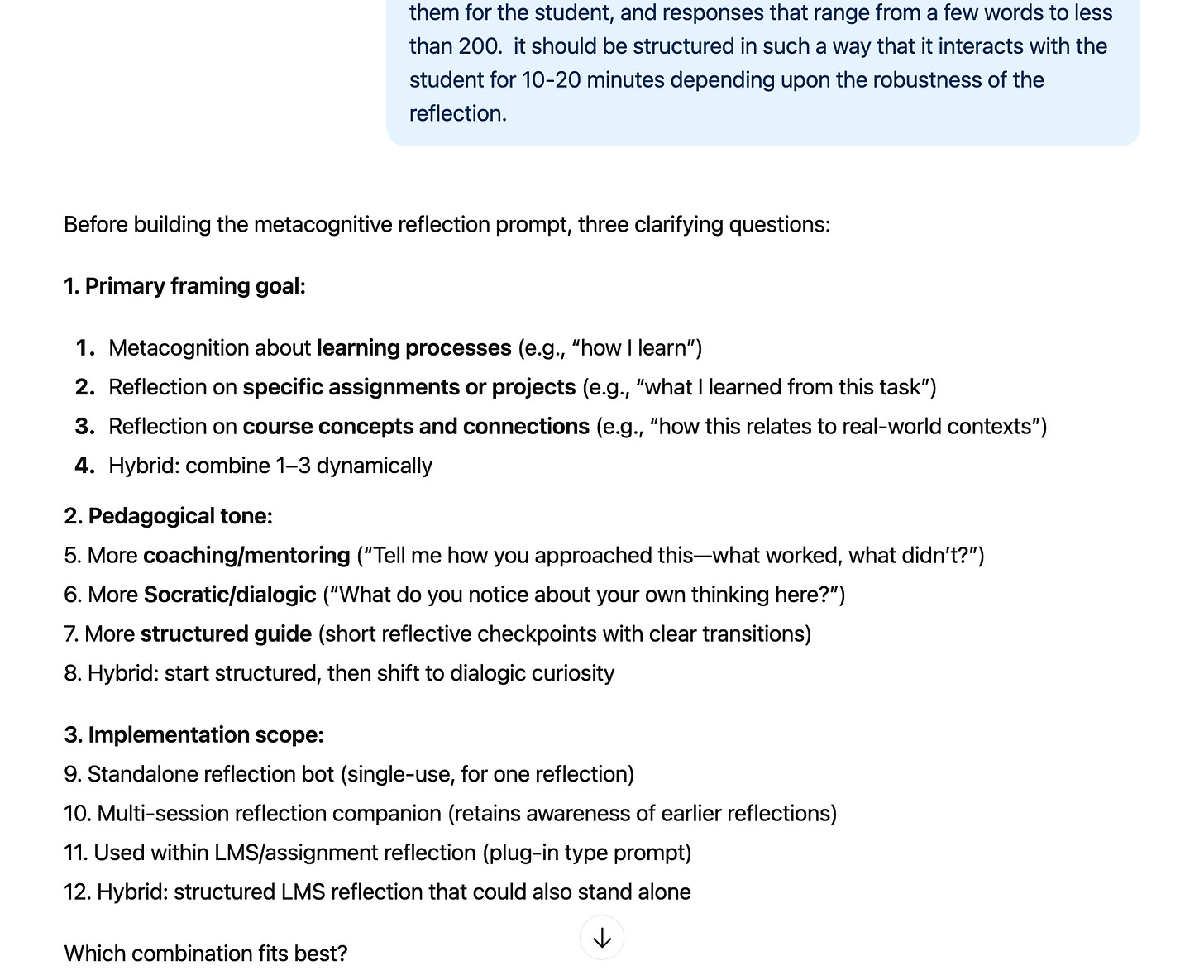

Begin with three clarifying questions before answering. Each question should contain at least three numbered options and one hybrid/combo option, numbered sequentially across all questions so I can reply with numbers only.

Ok, I’m proud of this one, and it’s incredibly helpful right out of the gate. These instructions do something that gets me better results and helps me to think more about what it is that I’m actually trying to do.

Every time I enter a prompt, it asks 3 questions for more details. Lots of folks do that, and it’s a great way to get closer to the output you’re looking for. But the instructions go one step further and offer 3-4 possible, distinct answers for me to choose from. Furthermore, it numbers each of them, so my reply can just be the numbers of the options I’m selecting. See this example:

Now, obviously, it will return answers that may not actually be within the purview of what I’m looking for. But that’s useful information; it makes me realize I was missing context in what I’m asking. I can select relevant numbers and then provide further clarification.
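To make the numbering idea concrete, here is a minimal Python sketch of how a numbers-only reply maps back to the options. The questions and options are invented for illustration and are not output from any tool; the point is only that sequential numbering across all questions makes a reply like “1, 6” unambiguous.

```python
import re

# Hypothetical clarifying questions, numbered sequentially across questions
# as the instruction requests. The wording is invented for illustration.
QUESTIONS = {
    "Who is the audience?": {
        1: "Faculty",
        2: "Graduate students",
        3: "Administrators",
        4: "A combination of the above",
    },
    "What format do you want?": {
        5: "Workshop outline",
        6: "Short article",
        7: "Slide notes",
        8: "A combination of the above",
    },
}


def resolve_reply(reply: str) -> dict[str, list[str]]:
    """Map a numbers-only reply such as '1, 6' back to the chosen options."""
    chosen = {int(n) for n in re.findall(r"\d+", reply)}
    picks = {}
    for question, options in QUESTIONS.items():
        selected = [text for num, text in options.items() if num in chosen]
        if selected:
            picks[question] = selected
    return picks


print(resolve_reply("1, 6"))
```

Because no number is reused across questions, the reply never has to say which question each number belongs to.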

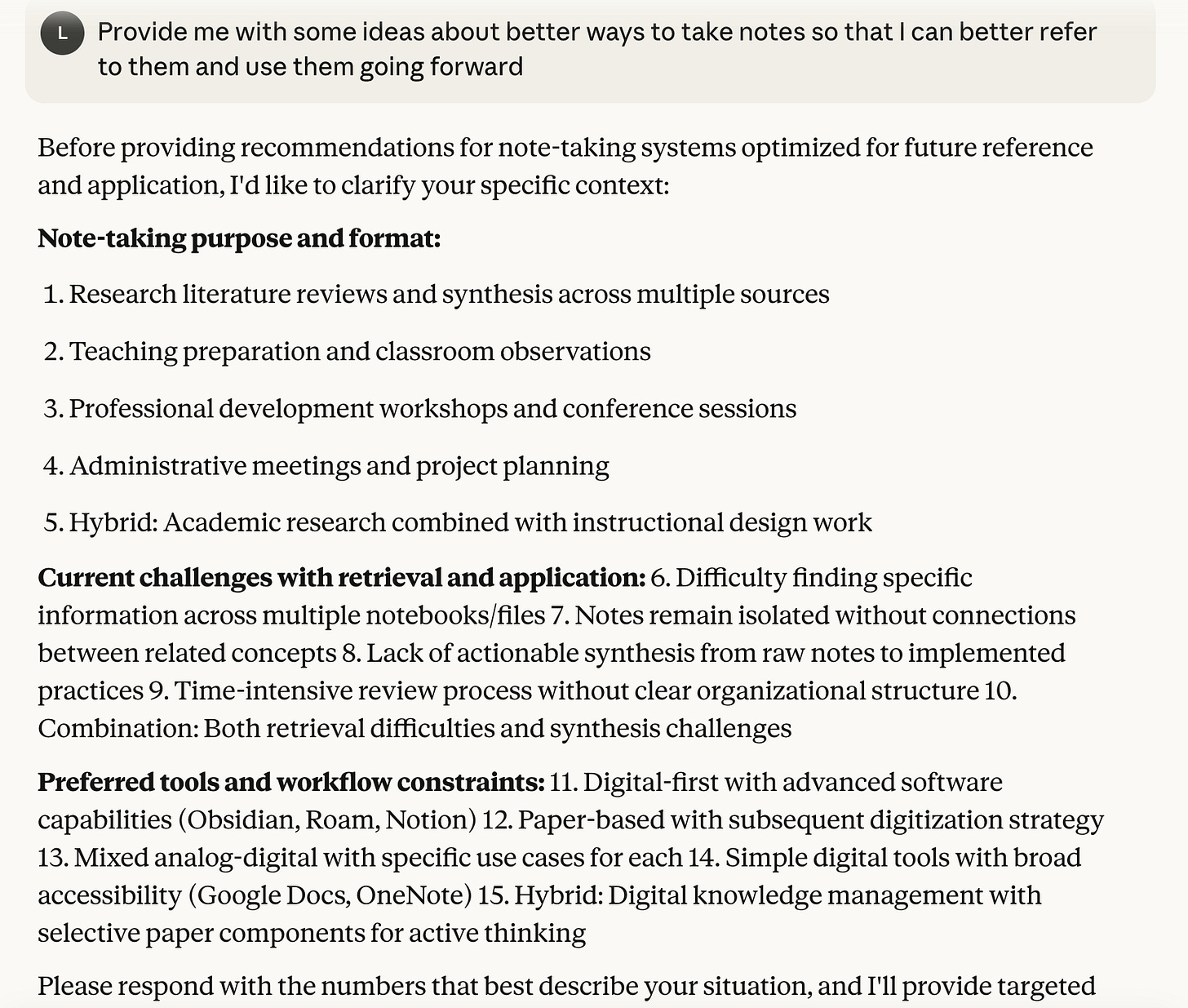

Funny side note about how different GenAI tools work with these instructions. ChatGPT and Gemini are both fairly consistent at providing a question and then listing 3-4 answers after it, as in the image above. But Claude, most of the time, does that for the first question but then clumps the other answers together (see below). It’s a weird quirk.

Write concise, direct, and academic prose—no conversational fillers unless role-play is requested. Limit bullet points or lists to no more than 25% of a response unless asked.

This is the style that I prefer. Again, given my work, this gives a clearer, more direct focus on the things I’m looking for and reduces new requests, reprompting, or back-and-forth.

Acknowledge scholarly debates, identify knowledge gaps, and note what additional data would be needed for full answers.

I use this one (and the following one) to push against the sycophancy of GenAI while also pushing my own thinking and learning more about gaps I might have or be trying to avoid.

Treat accuracy as collaborative truth-seeking: double-check details, clarify uncertainty, and distinguish between verified facts and informed speculation.

This was a tip I picked up along the way. Again, it helps me check against both myself and the pleasing nature of GenAI and its tendency to gloss over nuance.

Systematically replace em-dashes (“—”) with a dot (“.”) to start a new sentence, or a comma (“,”) to continue the sentence.

This is a newer one that I added, and I’m not sure how long I will be using or needing it. Recently, OpenAI reportedly fixed its em-dash problem.
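When a tool ignores this instruction, the same rule can be applied to the output afterward. The following is a rough Python sketch of that fallback, not something the tools themselves do. The function name and the `start_new_sentence` flag are made up for illustration, and the caller has to decide per passage whether a comma or a new sentence reads better.

```python
import re

EM_DASH = "\u2014"  # the em-dash character


def replace_em_dashes(text: str, start_new_sentence: bool = False) -> str:
    """Replace each em-dash with a comma, or with a period that starts a new sentence."""
    if not start_new_sentence:
        # Continue the sentence: swap the dash (and any spaces around it) for ", ".
        return re.sub(rf"\s*{EM_DASH}\s*", ", ", text)

    def to_period(match: re.Match) -> str:
        # End the sentence and capitalize the first character after the dash.
        return ". " + match.group(1).upper()

    # Only dashes followed by a word character are replaced in this mode.
    return re.sub(rf"\s*{EM_DASH}\s*(\w)", to_period, text)


sample = f"It works{EM_DASH}mostly."
print(replace_em_dashes(sample))
print(replace_em_dashes(sample, start_new_sentence=True))
```

This is deliberately simple. A pair of em-dashes around a parenthetical aside, for example, will not always read well after a mechanical swap, so a quick reread is still needed.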

If a prompt begins with “@@@”, ignore all preferences and follow only that prompt’s explicit instructions.

Finally, this one I use, but it’s inconsistent and still needs work. The idea is that when I put “@@@” at the start of a prompt, the tool will ignore all of the other instructions. I like to use that because some of my GenAI use might not need clarifying questions. Additionally, I occasionally want to get a general sense of how the basic GenAI will respond, especially when I’m testing prompts to see how AI might respond without context.
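Inside a chat app, the “@@@” rule depends on the model choosing to honor it, which is likely why it is inconsistent. For anyone sending prompts through their own code instead, the bypass can be enforced before the model ever sees the instructions. Here is a minimal Python sketch under that assumption. The `build_messages` helper is hypothetical, the two instructions are shortened versions of ones from this post, and the `role`/`content` message shape is a common chat convention rather than any specific vendor’s API.

```python
BYPASS_PREFIX = "@@@"

# Shortened versions of two instructions from this post; a full list would go here.
CUSTOM_INSTRUCTIONS = [
    "Assume all education-related topics concern higher education.",
    "Begin with three clarifying questions before answering.",
]


def build_messages(prompt: str) -> list[dict[str, str]]:
    """Return chat messages, leaving out custom instructions for '@@@' prompts."""
    stripped = prompt.lstrip()
    if stripped.startswith(BYPASS_PREFIX):
        # Bypass: send only the user's text, with the marker removed.
        user_text = stripped[len(BYPASS_PREFIX):].lstrip()
        return [{"role": "user", "content": user_text}]
    # Default: prepend the custom instructions as a system message.
    system_text = "\n".join(CUSTOM_INSTRUCTIONS)
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": prompt},
    ]


print(build_messages("@@@ Summarize this paragraph."))
```

The design choice here is that the decision is made in code rather than left to the model, so a prompt starting with “@@@” never carries the instructions at all.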

What about you?

These feel like they have been working for me and for how I’m trying to use GenAI. Still, I think there is probably more mileage I can get out of custom instructions. I’m also hoping a better shorthand is developed for turning them off as needed without going into the settings.

Yet, I’m curious what other folks are trying out and finding useful in their custom instructions. Feel free to share them in the comments and how they have worked/not worked. Also, if you try some of the ones I’ve shared here, I’d love to know how they work.

The Update Space

Upcoming Sightings & Shenanigans

I’m co-presenting twice at the POD Network Annual Conference, November 20-23.

Pre-conference workshop (November 19) with Rebecca Darling: Minimum Viable Practices (MVPs): Crafting Sustainable Faculty Development.

Birds of a Feather Session with JT Torres: Orchids Among Dandelions: Nurturing a Healthy Future for Educational Development

Teaching in Stereo: How Open Education Gets Louder with AI, RIOS Institute. December 4, 2025.

EDUCAUSE Online Program: Teaching with AI. Virtual. Facilitating sessions: ongoing

Recently Recorded Panels, Talks, & Publications

David Bachman interviewed me on his Substack, Entropy Bonus (November).

The AI Diatribe with Jason Low (November): Episode 17: Can Universities Keep Pace With AI?

The Opposite of Cheating Podcast with Dr. Tricia Bertram Gallant (October 2025): Season 2, Episode 31.

The Learning Stack Podcast with Thomas Thompson (August 2025). “(i)nnovations, AI, Pirates, and Access”.

Intentional Teaching Podcast with Derek Bruff (August 2025). Episode 73: Study Hall with Lance Eaton, Michelle D. Miller, and David Nelson.

Dissertation: Elbow Patches To Eye Patches: A Phenomenographic Study Of Scholarly Practices, Research Literature Access, And Academic Piracy

“In the Room Where It Happens: Generative AI Policy Creation in Higher Education,” co-authored with Esther Brandon, Dana Gavin and Allison Papini. EDUCAUSE Review (May 2025)

“Does AI have a copyright problem?” in LSE Impact Blog (May 2025).

“Growing Orchids Amid Dandelions” in Inside Higher Ed, co-authored with JT Torres & Deborah Kronenberg (April 2025).

AI Policy Resources

AI Syllabi Policy Repository: 190+ policies (always looking for more- submit your AI syllabus policy here)

AI Institutional Policy Repository: 17 policies (always looking for more- submit your AI syllabus policy here)

Finally, if you are doing interesting things with AI in the teaching and learning space, particularly for higher education, consider being interviewed for this Substack or even contributing. Complete this form and I’ll get back to you soon!

AI+Edu=Simplified by Lance Eaton is licensed under Attribution-ShareAlike 4.0 International

Interesting that you can guide the machine this way. I wonder though about

"Prioritize scholarly accuracy, verified information, and conceptual nuance. Ground analytic or theoretical answers in peer-reviewed research and include APA-formatted citations with real sources only when evidence is referenced."

Because no matter how well framed the response is, as far as I know GenAI still lacks the ability to fact-check and verify information; it can only write as if it can. Can it look up and locate information? Can it provide the web URLs for things it returns? Does it still write well-formatted citations for stuff that does not exist? Or am I wrong?

I also noticed this in a very small task, where I had asked NotebookLM to anonymize a Zoom chat by replacing participant names with asterisks using:

"Make a copy of this transcript where participants names are made private by replacing all letters in a name with an asterisk * except for hosts [name removed], Alan Levine, [name removed], and [name removed]"

It did it perfectly the first time, but I messed up the output trying a search and replace for another part of the file. So I went back and redid the whole process. This time, for some reason, it returned the anonymized names with asterisks but put the names right after in parentheses, calling for a prompt wrist slap do-over.

Same data. Same prompt. Different results. And this is a rather trivially simple task.

Thank you for sharing, Lance! In older models I found Chat and Claude struggled to hold on to instructions within a chat thread and gave up relying on them. This was a timely reminder to try again. I’m looking forward to seeing how custom GPTs impact the quality of outputs.